src

p82

“Modern objectivity mixes rather than integrates disparate components,” each with its own history and each conceptually distinct

Noninterventionist aka mechanical objectivity = one element of our current notion of objectivity

mechanical objectivity combats “the subjectivity of scientific and aesthetic judgment, dogmatic system building, and anthropomorphism.”

“texts so laconic that they threaten to disappear entirely”

mechanical objectivity in science “attempts to eliminate the mediating presence of the observer”

p83

“the vastness and variety of nature require that observations be endlessly repeated”

mechanical objectivity “is a vision of scientific work that glorifies the plodding reliability of the bourgeois rather than the moody brilliance of the genius.”

mechanized vs moralized science; these two seemingly disparate types are actually closely related

p85

“The atlas aims to make nature safe for science; to replace raw experience— the accidental, contingent experience of specific individual objects— with digested experience.”

p86

“the faithful drawing, like nature, outlives ephemeral theories— a standing reproach to all who would, whether ‘by their error or bad faith,’ twist a fact to fit a theory.”

drawings (in atlases) were, in the way described in the quote above, one of the first signs of the mechanical objectivity to come (in the mid/late 1800s)

“in order to decide whether an atlas picture is an accurate rendering of nature, the atlas maker must first decide what nature is.”

– the problem of choice:

1. “which object should be presented as the standard phenomena of the discipline…”

2. “…and from which viewpoint?”

p87

“the mere idea of an archetype in general implies that no particular animal can be used as our point of comparison; the particular can never serve as a pattern for the whole”

p88

“the observer never sees the pure phenomenon with his own eyes; rather, much depends on his mood, the state of his senses, the light, air, weather, the physical object, how it is handled, and a thousand other circumstances.”

– the experience of an object drawn from an individual instance (as opposed to the archetype, which is derived from a series of individual experiences) depends entirely on its circumstances; nothing is experienced in a vacuum

before mechanical objectivity the observer had to manually distill the typical from the variable and accidental

– this is how archetypes were formed

two major variants of “typical” images:

1. ideal = not merely the typical but the “perfect”

2. characteristic = locates the typical in an individual

these 2 variants had different ontologies and aesthetics

p89

the ideal as “the best pattern of nature”

p90

Bernhard Albinus’s pictures of skeletons “are pictures of an ideal skeleton, which may or may not be realized in nature, and of which this particular skeleton is at best an approximation.”

“nature is full of diversity, but science cannot be. [the atlas maker] must choose [their] images, and [their] principle of choice is frankly normative”

– example of normative choice: Albinus picked a male skeleton of “middle stature,” without blemish or deformity, with pleasing proportions, etc.; he took artistic liberties to make the image of the ideal skeleton “more perfect”

p91

“Whenever the artist alone, without the guidance and instruction of the anatomist, undertakes the drawing, a purely individual and partly arbitrary representation will be the result, even in advanced periods of anatomy. Where, however, this individual’s drawing is executed carefully and under the supervision of an expert anatomist, it becomes effective through its individual truth, its harmony with nature, not only for purposes of instruction, but also for the development of anatomic science; since this norm, which is no longer individual but has become ideal, can only be attained through an exact knowledge of the countless peculiarities of which it is the summation.”

– an expert (not just an artist) is required to make an accurate “ideal” image, because one must know what is peculiar and what is common to the object/phenomenon in order to correct, alter, or eliminate the peculiar

p93

“Hunter, like Albinus, considered the beauty of the depiction [i.e., aesthetic judgement] to be part and parcel of achieving that accuracy, not a seduction to betray it.”

“scientific naturalism and the cult of individuating detail long antedated the technology of the photograph.”

naturalism ≠ rejection of aesthetics

p95

“statistical essentialism”

p96

mid-19th-century atlases (scientific imaging) marked the transition from “truth to nature” via the typical (ideal, characteristic exemplar, average) to mechanical objectivity

“the asceticism of noninterventionist objectivity” (withholding internal aesthetic judgement)

“the dangers of excessive accuracy”

p98

“images of individuals came to be preferred to those of types, and … techniques of mechanical reproduction seemed to promise scientists salvation from their own worst selves” (late 1800s)

Objectivity & Mechanical Reproduction

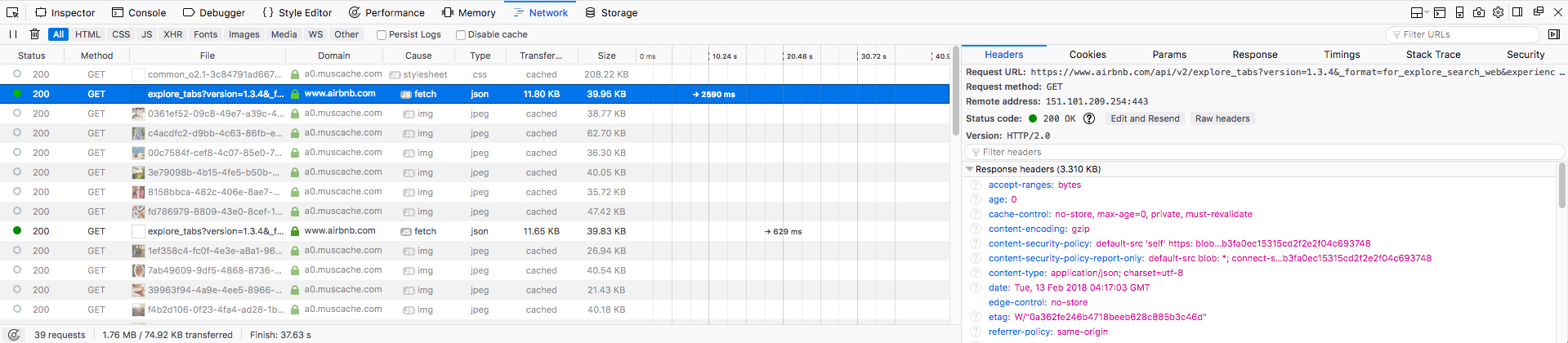

“photographic depiction entered the fray along with X-rays, lithographs, photoengravings, camera obscura drawings, and ground glass tracings as attempts— never wholly successful— to extirpate human intervention between object and representation.”

“Interpretation, selectivity, artistry, and judgment itself all came to appear as subjective temptations requiring mechanical or procedural safeguards.”

“the image, as standard bearer of objectivity, is inextricably tied to a relentless search to replace individual volition and discretion in depiction by the invariable routines of mechanical reproduction.”

p100

“the reproduction of nature by man will never be a reproduction and imitation, but always an interpretation… since man is not a machine and is incapable of rendering objects mechanically.”

p103

“what characterized the creation of late nineteenth-century pictorial objectivism was *self*-surveillance, a form of self-control at once moral and natural-philosophical.”

p107

“the most a picture could do was to serve as a signpost, announcing that this or that individual anatomical configuration stands in the domain of the normal.”

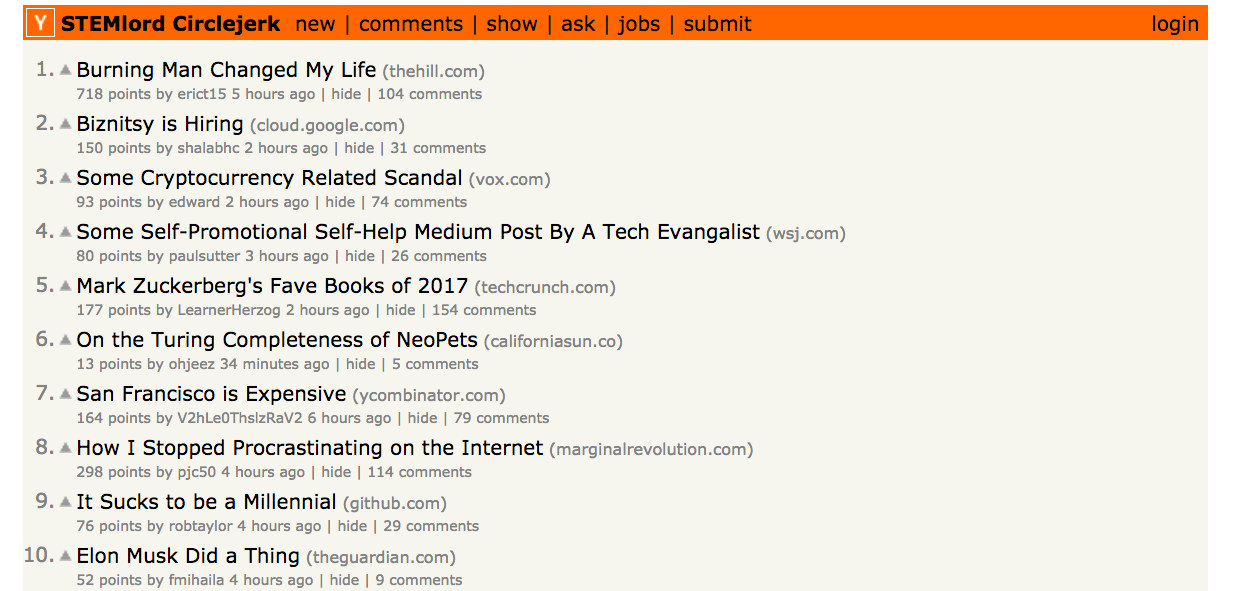

“while in the early nineteenth century, the burden of representation was supposed to lie in the picture itself, now it fell to the audience. The psychology of pattern recognition in the audience had replaced the metaphysical claims of the author.”

p109

“Caught between the infinite complexity of variation and their commitment to the representation of individuals, the authors must cede to the psychological. Selection and distillation, previously among the atlas writer’s principal tasks, now were removed from the authorial domain and laid squarely in that of the audience. Such a solution preserved the purity of individual representation at the cost of acknowledging the essential *role* of the reader’s response: the human capacity to render judgement, the electroencephalographers cheerfully allow, is ‘exceedingly serviceable.’”

– judgement becomes the role of the reader not the atlas maker

p110

“the X-ray operator either by wilfulness or negligence in fastening the plate and making the exposure may exaggerate any existing deformity and an unprejudiced artist should be insisted upon.”

p111

“It is not that a photograph has more resemblance than a handmade picture, but that our belief guarantees its authenticity… We tend to trust the camera more than our own eyes.”

p112

“Photographs lied.”

“The photograph… did not end the debate over objectivity; it entered the debate.”

Attempts to make photographs (X-rays in this case) achieve complete truth to nature, given that they still depend on the viewer’s interpretation:

– “demand witnesses to the production of the image”

– “require experts to mediate between picture and the public”

– “recommend that the surgeons themselves learn the techniques necessary to eliminate their dependence on the intermediate readers.”

This is a problem of expertise. For data to be turned into information, a data analyst must first vet the data, and then a communication designer must translate it for a given audience. The layperson doesn’t have time to search through datasets and acquire these areas of expertise themselves.

What if an area of expertise existed which sought to supply laypeople with the tools to be their own expert?

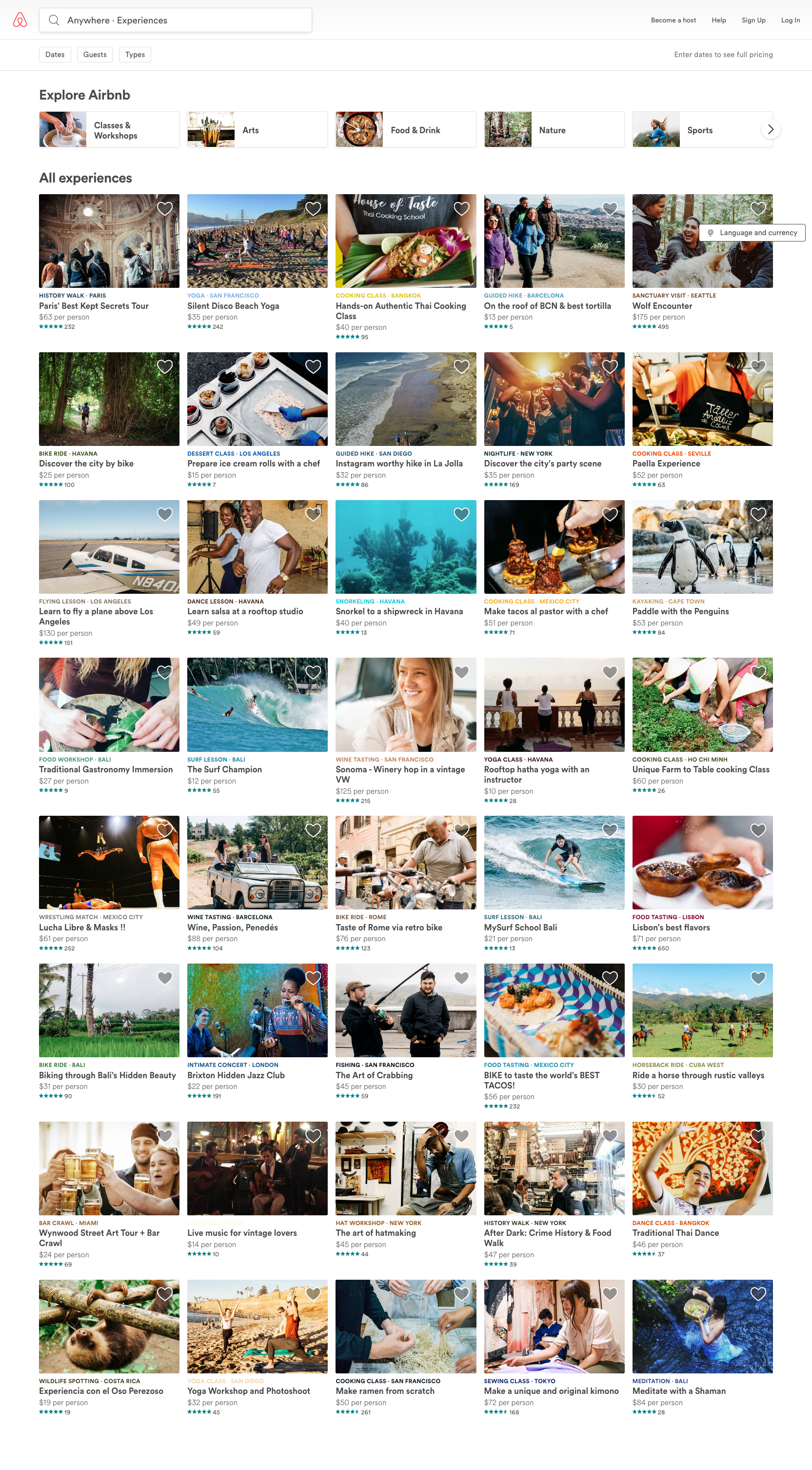

– Can an interface be built for a dataset that would let the user turn the data into knowledge themselves?

– What role or impact on the data would the interface designer have?

– How would this impact differ from that of an infographic designer, who expresses one specific point using cherry-picked data from the larger set?

– More generalized audience (?)

– Not cherry-picked data, not one point to be made with the data

– Modes of viewing/understanding the dataset would still be under complete control of the designer. The designer would still have to make decisions regarding *how* the data *should* be explored by designing-in specific user experiences.

“Knowledge obtained by long experience and positive indications is far more valuable than any representation visible alone to the eye.”

– me doing research for this project, becoming an expert on the topic of truth-to-nature in scientific imaging

– what’s the significance of this?

– argue for the importance of expertise, and raise the question of whether it can be temporarily appropriated or “handed off” to the end recipient of the selected information

p117

“authenticity before mere similarity” – the benefit/offering of mechanical objectivity

Objectivity Moralized

“no atlas maker could dodge the responsibility of presenting figures that would teach the reader how to recognize the typical, the ideal, the characteristic, the average, or the normal.”