In the spirit of Halloween and current political events, Marco and I decided to build a talking cyborg Trump head. The head is meant to detect someone’s presence in front of it and speak. We’ve been having problems hooking up the audio playback, and the build is not finished yet: it needs an amp to increase the output volume, and we have yet to find a fix for the SPI chip selection causing an unresponsive servo. This is its current state:

Mr Cyborg Trump provocatively speaks a line originally spoken by Mrs Clinton: “Apologizing is a great thing, but you have to be wrong. I will absolutely apologize sometime, in the hopefully distant future, if I’m ever wrong.”

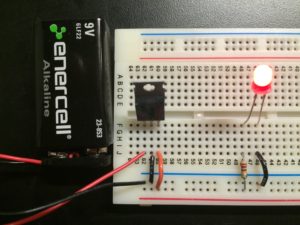

Parts

- Rubber Trump mask

- Stuffing (cotton, bubble wrap)

- Arduino UNO

- IR motion sensor

- 8 ohm speaker

- Servo motor

- Micro SD card reader and 1 GB micro SD card

- 9 V battery pack

Code (Arduino)

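#include <SD.h>
#include <pcmConfig.h>
#include <pcmRF.h>
#include <TMRpcm.h>
#include <SPI.h>
#include <Servo.h>

#define SD_ChipSelectPin 4

TMRpcm audio;
Servo mouth;

// using an orange-orange-gold resistor (3.3 ohms) for the IR sensor
// don't delay less than 250 ms between openMouth and closedMouth
// note: on the UNO, Servo and TMRpcm (output on pin 9) both use Timer1 by default,
// which may be related to the unresponsive servo

int speakerPin = 3; // digital out (unused: the TMRpcm output pin is set in setup())
int servoPin = 2; // digital out
int sensorPin = A0; // analog in

int closedMouth = 45; // servo angle, mouth shut
int openMouth = 180; // servo angle, mouth open
int sensorStrength = 500; // analog reading above which movement is detected

unsigned long lengthOfPhrase = 9000; // in milliseconds
unsigned long timePhraseBegin = 0;

boolean movementDetected = false;
boolean speakOnce = false;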

void setup() {
  audio.speakerPin = 9;
  Serial.begin(9600);

  mouth.attach(servoPin);
  mouth.write(closedMouth);

  if (!SD.begin(SD_ChipSelectPin)) { // see if the card is present and can be initialized
    Serial.println("SD fail");
    return; // don't do anything more if not
  } else {
    Serial.println("SD success");
  }

  audio.setVolume(5);
}

void loop() {
  unsigned long timePassed = millis() - timePhraseBegin;
  int sensorRead = analogRead(sensorPin);

  if (sensorRead > sensorStrength) {
    if (!movementDetected) {
      Serial.println("ON.");
      timePhraseBegin = millis();
    }
    movementDetected = true;
  } else {
    if (movementDetected && timePassed >= lengthOfPhrase) {
      Serial.println("OFF.");
      movementDetected = false;
      speakOnce = false;
    }
  }

  if (movementDetected) {
    // move mouth
    mouth.write(openMouth);
    delay(250);
    mouth.write(closedMouth);
    delay(250);

    // speak
    if (!speakOnce) {
      audio.play("0000.wav");
      speakOnce = true;
    }
  } else {
    // close mouth
    mouth.write(closedMouth);
    delay(250);
  }
}
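
One possible refinement, which is not part of the build above: loop() spends up to 500 ms per pass inside delay(), so the IR sensor is only sampled about twice a second while the mouth is moving. A minimal sketch of the same detection and mouth-flapping logic, written as small helpers driven by a millis() timestamp instead of delay(), might look like this. `PresenceLatch` and `mouthAngleAt` are hypothetical names introduced here for illustration; the threshold, phrase length, servo angles, and 250 ms interval are copied from the sketch above.

```cpp
#include <stdint.h>

// Values copied from the sketch above; the helper names are hypothetical.
const int kClosedMouth = 45;            // servo angle, mouth shut
const int kOpenMouth = 180;             // servo angle, mouth open
const uint32_t kMouthIntervalMs = 250;  // time spent in each mouth position

// Latches "someone is here" once the sensor reading exceeds the threshold,
// and releases it only after holdMs has elapsed and the reading has dropped.
class PresenceLatch {
 public:
  PresenceLatch(int threshold, uint32_t holdMs)
      : threshold_(threshold), holdMs_(holdMs), active_(false), startedAt_(0) {}

  // Feed one sensor reading. Returns true only on the call that starts a
  // new activation, which is the moment to start audio playback.
  bool update(int reading, uint32_t nowMs) {
    if (reading > threshold_) {
      if (!active_) {
        active_ = true;
        startedAt_ = nowMs;
        return true;
      }
      return false;
    }
    // Unsigned subtraction stays correct across millis() rollover.
    if (active_ && nowMs - startedAt_ >= holdMs_) {
      active_ = false;
    }
    return false;
  }

  bool active() const { return active_; }
  uint32_t startedAt() const { return startedAt_; }

 private:
  int threshold_;
  uint32_t holdMs_;
  bool active_;
  uint32_t startedAt_;
};

// Mouth angle as a function of time since activation: open during even
// 250 ms slots, closed during odd ones, without any delay() calls.
int mouthAngleAt(uint32_t elapsedMs) {
  uint32_t slot = elapsedMs / kMouthIntervalMs;
  return (slot % 2 == 0) ? kOpenMouth : kClosedMouth;
}

// Possible use inside loop() (Arduino-only calls, so left as a comment):
//   static PresenceLatch latch(500, 9000);
//   uint32_t now = millis();
//   if (latch.update(analogRead(sensorPin), now)) audio.play("0000.wav");
//   mouth.write(latch.active() ? mouthAngleAt(now - latch.startedAt())
//                              : kClosedMouth);
```

Keeping the timing logic free of hardware calls means it can be checked on a desktop compiler before it goes on the UNO. We have not tested this on the head itself, so treat it as a starting point.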